A picture may be worth a thousand words, but the inverse is also true: A word is worth a thousand pictures. If I say “bear,” you might picture a grizzly or a black bear, a polar bear, a panda bear, a burden of weight or stress (“more than I can bear”), or even a cartoon or plush toy (like the Care Bears).

This slippery, imprecise quality of words was a serious concern for scientists in the mid-17th century. In the midst of the Scientific Revolution, natural philosophers (as they were called at the time) were still figuring out which methodological practices could be considered reliable, and visual observation was considered the ultimate in reliability. Images were thought to show the truth of nature, or what the U.K. Royal Society historian Thomas Sprat called “a bare knowledge of things.”

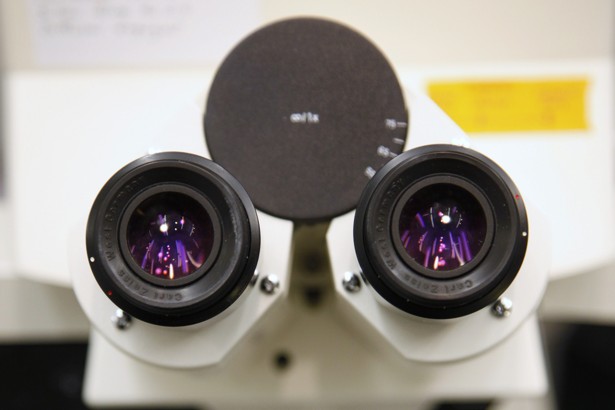

Still, 17th-century scientists understood the limitations of the human senses. Our eyes can only do so much observing on their own; they need lenses to see things far away, and to see the minute details of things up close. While some natural philosophers were skeptical that magnification could accurately present images of nature without problematic distortion, others, like Robert Hooke, were convinced that the microscope could unlock countless mysteries of the universe. Through the efforts of Hooke and his colleagues at the Royal Society (the same U.K. academy of science that still flourishes today), the microscope took on a central role in epistemology. It didn’t just change what we could know by seeing; it changed the way we thought about what it means to know.

There’s some debate over who invented the compound microscope, but many credit the Dutch eyeglass maker Zacharias Jansen and his father Hans with creating the first such device in the 1590s. At any rate, the timing makes sense: By the turn of the 16th century, the use of glass lenses for vision correction was widespread in Europe, laying the groundwork for the microscope’s use of overlapping lenses for increased magnification.

As early scientists worked to refine the microscope, adding and reconfiguring lenses to increase magnification, they also had to contend with the distortion that came with using multiple lenses. One such distortion arises because a lens bends different wavelengths of light at slightly different angles. The result, called chromatic aberration, is that the lens can’t focus all colors of light to the same point of convergence. White light passing through an unsuitable lens, for example, will leave fringes of color around the object being viewed. Accordingly, microscopists in the 17th and 18th centuries experimented with various types of glass for their lenses alongside the mechanics of the microscopes themselves, working to increase magnification without losing image quality.
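For readers who want to see the effect in numbers, here is a minimal sketch of chromatic aberration in a single thin lens. It is an illustration under stated assumptions, not a model of any historical instrument: the Cauchy coefficients are rough values for a modern crown glass, the lens radii are invented for the example, and the function names are hypothetical.

```python
# Illustrative sketch: how focal length shifts with color in one thin lens.
# Assumptions (not from the article): Cauchy dispersion n = A + B / wavelength^2
# with rough coefficients for a modern crown glass, and a symmetric
# biconvex lens with made-up 50 mm surface radii.

def refractive_index(wavelength_um, a=1.5046, b=0.00420):
    """Approximate refractive index at a wavelength given in micrometers."""
    return a + b / wavelength_um ** 2


def focal_length_mm(wavelength_um, r1_mm=50.0, r2_mm=-50.0):
    """Thin-lens lensmaker's equation: 1/f = (n - 1) * (1/R1 - 1/R2)."""
    n = refractive_index(wavelength_um)
    return 1.0 / ((n - 1.0) * (1.0 / r1_mm - 1.0 / r2_mm))


# Representative wavelengths for three colors, in micrometers.
colors = {"blue": 0.45, "green": 0.55, "red": 0.65}
focal_lengths = {name: focal_length_mm(w) for name, w in colors.items()}

for name, f in focal_lengths.items():
    print(f"{name}: focal length {f:.2f} mm")

# Blue light bends more than red, so it comes to a focus closer to the lens.
focal_shift_mm = focal_lengths["red"] - focal_lengths["blue"]
print(f"red focus lies {focal_shift_mm:.2f} mm beyond blue focus")
```

Because the glass bends blue light more strongly than red, the two colors come to a focus at slightly different distances, and that gap is what shows up as colored fringing at the eyepiece.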

But the promise that Hooke saw in the technology began to fade by the turn of the 18th century as microscope development stagnated. The scientific community, Hooke complained, believed there was “no more to be done” with the microscope. The statement was a bit melodramatic—the microscope had gained traction as a scientific tool—but it contained a grain of truth: Hooke and his colleagues at the Royal Society hadn’t fully convinced the scientific community of the device’s potential.

Today, of course, we recognize microscopy as essential to research in medicine, the life sciences, chemistry, and physics. The limitations of optical, or light, microscopes have given way to the power of electron microscopes, which enable us to see things thousands of times smaller than a wavelength of light.

Despite the achievements of modern microscopy, “seeing is believing” has a different flavor than it did centuries ago. Cleanly magnified images are no longer the end goal of scientific pursuit; instead, it’s the data into which we translate such images. The microscope may now be a symbol of scientific practice, but data has become the real currency of knowledge, both scientific and otherwise. Microscopy and its related observational methods have given way to “data science” as the most reliable way of knowing. Nevertheless, the rise of the microscope in Hooke’s era tells us quite a lot about the role of data in ours.

In 1665, Hooke published Micrographia, a work that included striking illustrations of what he saw under his microscope—images the likes of which most people had never seen before. Among the drawings of pieces of flint, cork, and foliage, Micrographia’s most compelling illustrations were also the most unsettling: close-ups of insect eyes, the spiny legs of fleas and mites, the stingers of bees. Hooke’s verbal descriptions of the images hardly mitigated their striking visual effect. As he wrote of the bee’s stinger, for example:

The top of the Sting or Dagger is very easily thrust into an Animal's body … By an alternate and successive retracting and emitting of the Sting in and out of the sheath, the little enraged creature by degrees makes his revengeful weapon pierce the toughest and thickest Hides of his enemies …

The vivid language of Hooke’s descriptions (stingers as “daggers” and bees as “enraged creatures” wielding “revengeful weapons”) was a type of distortion in its own right. In other ways, too, Hooke undercut his own belief that the image was more reliable than the word: He was known to tinker with the scale of his illustrations, adjust the orientation of the images, and cherry-pick certain details to focus on. Micrographia portrayed its findings as the visual truths of nature, untainted by the duplicitousness of words, but it was shaped by subjective choices.

Thomas Sprat’s History of the Royal Society, published two years after Micrographia, continued Hooke’s argument that showing was more powerful than telling. Sprat took the ancient Greek natural philosophers to task for being “men of hot, earnest, and hasty minds” who “loved to make sudden conclusions” and to “convince their hearers by argument” instead of “attend[ing] with sufficient patience the labor of experiments.”

Both Hooke’s emphasis on the visual and Sprat’s elevation of observation over argument persist in modern times. Consider, for example, Nate Silver’s argument for data journalism in a 2014 interview with New York magazine:

They [conventional journalists and commentators] don’t permit a lot of complexity in their thinking. They pull threads together from very weak evidence and draw grand conclusions based on them … They’re not really evaluating the data as it comes in, not doing a lot of thinking.

Once again, words are “weak evidence,” a threat to the perceived objectivity of data. Like Hooke and Sprat advocating for the reliability of the image, Silver advocates for the reliability of the number, or of data, to show us the truth.

As it happens, the word “data”—from the Latin for “that which is given”—entered the English language in the 17th century, around the time that the microscope was born. Hooke and Sprat’s ideas about the power of images seem to have an echo in Silver’s ideas about big data: For the 17th-century scientists, the image was true because it could speak for itself without speaking (as Hooke writes in his description of a flea: “there are many other particulars, which, being more obvious, and affording no great matter of information, I shall pass by, and refer the Reader to the Figure…”). The image was reliable because it was objective, unadulterated by words. And the image was a passive object that could be dispassionately evaluated without talking back.

Today we project these same qualities onto data, preferring to show our data rather than to explain it verbally—hence the growing fields of data visualization and information design. And yet, as Hooke and Sprat remind us, nothing really speaks for itself without words. Subjectivity lurks behind every chart, if only in the choices that led to a study of this thing over that thing, the inclusion of this image or that, this focal point or the other. All of these things require explanation. The rise of “big data” today is partly a function of computing technologies that enhanced our data-collecting and processing capabilities, but the microscope had long since taught us that objectivity is a myth.

This article appears courtesy of Object Lessons.