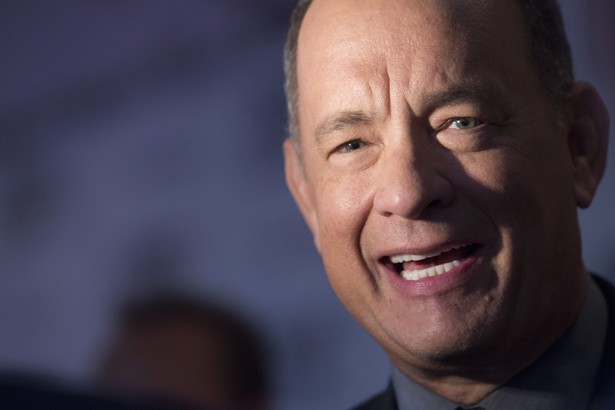

In the three decades that Tom Hanks has been a leading man in Hollywood, his appearance has changed dramatically. His hair has gone from dark shaggy curls to buzz cuts to salt-and-pepper gray, he’s undergone stark fluctuations in weight for different roles, and he’s transformed from a baby-faced twenty-something into a twinkly-eyed man of almost 60.

Yet Hanks has always retained an essential Tom Hanksiness. What is it, anyway, that makes people look like themselves?

That’s the question at the heart of a body of research in which computer scientists use machines to assess huge databases of photos of a person’s face, then reconstruct a 3-D simulation of that person’s likeness. The technique involves algorithms designed to map 49 points on a person’s face, then chart how those points change depending on facial expression.
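To make the idea of charting how points change a little more concrete, here is a minimal Python sketch. It is not the researchers’ code: the 49-point count comes from the description above, but the function names, the 2-D coordinates, and the synthetic "smile" data are all assumptions made for illustration.

```python
import numpy as np

NUM_POINTS = 49  # number of tracked facial points mentioned in the article


def expression_displacement(neutral, expressive):
    """Return the per-point (dx, dy) movement between two landmark sets."""
    neutral = np.asarray(neutral, dtype=float)
    expressive = np.asarray(expressive, dtype=float)
    if neutral.shape != (NUM_POINTS, 2) or expressive.shape != (NUM_POINTS, 2):
        raise ValueError("expected two arrays of shape (49, 2)")
    return expressive - neutral


def most_mobile_points(displacement, k=5):
    """Return the indices of the k points that moved farthest, largest first."""
    distances = np.linalg.norm(displacement, axis=1)
    return np.argsort(distances)[::-1][:k]


# Synthetic data standing in for real landmark detections (hypothetical).
rng = np.random.default_rng(0)
neutral = rng.uniform(0, 100, size=(NUM_POINTS, 2))
smile = neutral.copy()
# Pretend points 10 and 11 are mouth corners that move out and up.
smile[[10, 11]] += [[-3.0, -4.0], [3.0, -4.0]]

movement = expression_displacement(neutral, smile)
print(most_mobile_points(movement, k=2))
```

In this toy setup only the two pretend mouth-corner points move, so they are the ones the sketch reports as most mobile.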

The end result is an uncanny representation of the person, and not just that: These models can be animated in a ventriloquist-like manner, so that likenesses of people look as though they’re saying the words coming out of someone else’s mouth—all the while retaining their own mannerisms and expressions.

The best way to understand all this is to see it happen. Here’s a video showing former president George W. Bush speaking, alongside depictions of what several other famous individuals (Tom Hanks, Hillary Clinton, Barack Obama, Neil Patrick Harris, and others) would look like delivering the same message.

Here’s another example—skip ahead to around the 1-minute mark, which is where things get really surreal:

So, why would scientists want to do this—other than to show off their simultaneously dazzling and mega-creepy computer skills?

“One of the applications is in augmented reality and virtual reality,” said Ira Kemelmacher-Shlizerman, an assistant professor of computer engineering at the University of Washington, and one of the authors of a paper that is set to be presented at the International Conference on Computer Vision this month. “We’re not there yet, but our research leads to that.”

In other words, imagine if a video call didn’t just feature a person’s voice and two-dimensional face on a screen—but instead brought a projection of that person into the room with you. “What if you could do that, just to take it forward a few steps and make it more realistic? Make it like the person is in front of you in three dimensions, so you can see the actual expressions in detail,” Kemelmacher-Shlizerman told me.

There may also be applications in Hollywood. When the actor Brad Pitt played Benjamin Button in the story of a man who ages in reverse, filmmakers created his aging effect by coding his face in great detail in a lab setting. “The expressions were later used to create a personalized blend shape model and transferred to an artist created sculpture of an older version of him,” Kemelmacher-Shlizerman and her co-authors wrote in their paper. “This approach produces amazing results, however, [it] requires actor’s active participation and takes months to execute.”

What's special about the latest work by Kemelmacher-Shlizerman and her team is that the modeling comes from pre-existing photo collections—hence the focus on Hanks, who has been photographed a lot over the years. Other researchers in this space have relied on specific environments and lighting levels for their subjects in order to make realistic models.

“In the optimal setup, you’d say, ‘Let’s go to a lab, put 20 cameras around the room, decide on some lighting, and constrain all sorts of environmental conditions,’” Kemelmacher-Shlizerman said. “The big breakthrough in our research is we’re doing it in completely unconstrained environments unlike other research in this space.”

The algorithm Kemelmacher-Shlizerman and her team used assesses differences in lighting across huge troves of photographs by looking at changes in texture and various shadows on a person’s face. In one image, a shadow might be cast under a person’s nose; in another, a shadow might fall under that person’s eyes. “We can use the shadowing to estimate the shape of a person’s face,” she said.
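For a rough sense of how shading can reveal shape, here is a sketch of classic photometric stereo, a textbook technique swapped in for illustration rather than the team’s actual algorithm. It assumes things the researchers explicitly do not have: known light directions, a matte surface, and no cast shadows. The function name and the tiny synthetic images are hypothetical.

```python
import numpy as np


def estimate_normals(images, lights):
    """Recover surface orientation from differently lit images of one scene.

    images: (k, h, w) grayscale intensities, one image per light.
    lights: (k, 3) unit vectors pointing toward each light (assumed known).
    Returns (normals of shape (h, w, 3), albedo of shape (h, w)).
    """
    images = np.asarray(images, dtype=float)
    lights = np.asarray(lights, dtype=float)
    k, h, w = images.shape
    intensities = images.reshape(k, h * w)
    # Matte-surface model: intensity = light . (albedo * normal).
    # Solve for g = albedo * normal at every pixel by least squares.
    g, *_ = np.linalg.lstsq(lights, intensities, rcond=None)  # shape (3, h*w)
    albedo = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(albedo, 1e-12)
    return normals.T.reshape(h, w, 3), albedo.reshape(h, w)


# Synthetic 2x2 patch facing the camera, with brightness (albedo) 0.5.
true_normal = np.array([0.0, 0.0, 1.0])
lights = np.array([
    [0.0, 0.0, 1.0],
    [0.6, 0.0, 0.8],
    [0.0, 0.6, 0.8],
    [-0.6, 0.0, 0.8],
])
images = np.stack([np.full((2, 2), 0.5 * l @ true_normal) for l in lights])

normals, albedo = estimate_normals(images, lights)
print(normals[0, 0], albedo[0, 0])
```

Working from unconstrained photo collections is harder than this, since the lighting in each photo is unknown and has to be estimated along with the shape.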

The purpose of the puppeteering effect, she said, is to see how good a job they did in actually capturing a person’s likeness. (She acknowledged that this technology may open the door to impersonation without a subject’s consent, but said the same mechanism that creates a model in the first place could be used to “see if it’s fake or not.”)

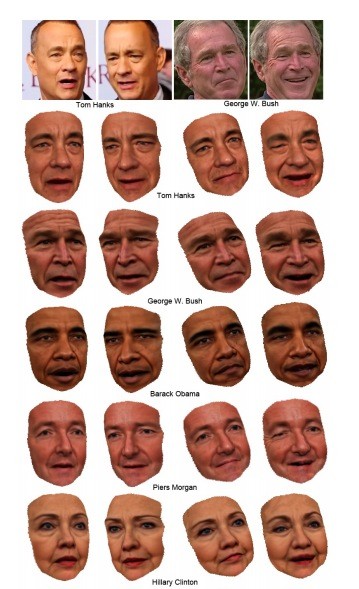

In the image above, researchers captured facial expressions by Tom Hanks and George W. Bush from YouTube videos, then projected those expressions onto models of other people’s faces. The top row shows the expressions being mimicked, and the subsequent rows show what other people look like making those faces, including Bush and Hanks swapping expressions, along with Barack Obama, Piers Morgan, and Hillary Clinton making the original expressions by Hanks and Bush.

What all this disturbing face-sharing demonstrates to computer scientists is that, if they’ve modeled a person’s likeness well enough, Barack Obama still looks like Barack Obama, even when he’s making a George W. Bush face.

“If we can produce a realistic model after transferring expressions, it means that we captured something,” Kemelmacher-Shlizerman told me. “Something in the [data] flow that seems like it captures that person’s identity. We cannot say yet that because George Bush raises his eyebrows some particular way, this is what makes George Bush George Bush.”

But there are otherwise unseen details that this sort of work reveals, like how the texture and even color of a person’s face change depending on expression. “You can see the distinguishing features, even if you don’t pinpoint and say, ‘This is what makes this person look like themselves,’” Kemelmacher-Shlizerman said.

As for how all this has changed the way Kemelmacher-Shlizerman thinks about human facial expressions overall, “I’m attuned to details more,” she said. “Perhaps more than I was before.”